Artificial Intelligence: Control it or Go with the flow

Ford is reviewing its self-driving car plans, and that could include pushing back the planned launch of its first fully-autonomous production car beyond 2021, Ford CEO Jim Hackett indicated in a recent interview1:

When asked whether he expects a complete shift to self-driving cars, or whether people will continue to be able to own and drive their own cars, Hackett replied, "it's the latter." Hackett also indicated that self-driving cars won't completely replace human drivers, and that shared fleets won't do away with private car ownership. "… We don't know that autonomous vehicle intelligence in the future will all be delegated to a service that no one owns but everyone uses," … "It could play a role in vehicles that people own, vehicles that aren't supposed to crash. You're buying the capability because of the protection it gives you. It's also possible it could be applied in these big, disruptive ways that of course, we're not blind to, but my bet is we don't know."

Ford will have to keep pushing the development of autonomous cars, but Hackett's more sensible attitude may be just what Ford needs to keep things in perspective. Why is his view sensible? Not so long ago, Daniel Pink published a nicely animated presentation on YouTube* explaining the history of motivational theories. He concluded that beyond a certain level, money is a dissatisfier and that Challenge, Mastery, and Making a Contribution are what truly drive people. In other words, a meaningful activity that you control and that challenges you is deeply inspiring.

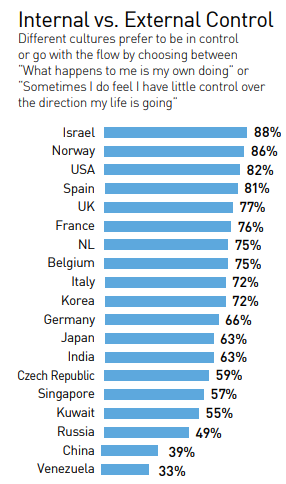

Mastery: ‘Control’ vs. ‘Go with the flow’. Since self-mastery is one of the most important sources of motivation, we must be concerned about the challenges that arise when Artificial Intelligence is introduced, as in the self-driving car. This orientation, or “locus of control”, is concerned with relationships and how we perceive our environment. Every culture has developed an attitude towards the (natural) environment. Survival has meant acting with or against it. The way people relate to their environment, internalistically or externalistically, is linked to the way they seek to have control over their own lives and over their destiny.

Internalistic people tend to have a mechanistic view of nature. They see nature as a complex machine, and machines can be controlled if you have the right expertise. Internalistic people do not believe in luck or predestination. They are “inner-directed”. One’s personal resolution is the starting point for every action. You can live the life you want to live if you take advantage of the opportunities. People can dominate nature, if they make the effort. Many Israeli and Anglo-Saxon people, for example, are highly internally controlled. So if the temperature is felt as too high, they adjust the thermostat of the aircon: you change the environment.

Externalistic people have a more organic view of nature. Mankind is one of nature’s forces, so it should operate in harmony with the environment. Humans should subjugate themselves to nature and go along with its forces. Externalistic people do not believe that they can shape their own destiny. “Nature moves in mysterious ways”, and therefore one never knows what will happen. The actions of externalistic people are “outer-directed”, and adapted to external circumstances. Russians, Arabs, and Singaporeans are notably externally controlled. When the temperature is too low, they adjust by putting on a sweater: you change yourself, not the environment.

With the ever-growing influence of Artificial Intelligence, Robotics, and profiling algorithms that suggest what products to buy or what TV movies to watch, this motivational paradigm can be challenged — in the way people perceive ‘mastery’ and ‘making a contribution’. But who is making the contribution? Do we still master the outcome?

Some cynics might argue that AI is just old wine in a new bottle. We have more data and speedier computers, but in essence the producer is ultimately a human individual or team in full control of its output. In many instances, the cynics are right. We know the source code, and algorithms and data mining are not new. But what if our robots develop the kind of “intelligence” in which they start learning and we can’t follow how and what they have learned? Are we still in control or aren’t we?

This ‘control’ vs. ‘go with the flow’ tension leads to many contradictions. The general conflicts manifest themselves as follows:

- The dauntless entrepreneur vs. Public benefactor

- Originate products vs. Refine products

- Bureaucratic hierarchy vs. Organic hierarchy

- Quick to invent vs. Quick to adopt

- Technology Push vs. Market pull

The manifestations of such tensions have shown in many implementations of AI too. Let’s take the interesting “autocorrect” function on our smartphones. Is it handy or isn’t it? The answer depends on the mood. Sometimes we are delighted that we avoided a typo and other times we are irritated that it distorted the meaning of what we wanted to communicate.

Let’s take the following business case as an example. A French production manager had to make a difficult decision on what machine to buy. He compared many alternatives and ended up shortlisting two types of machines. One was a fully automated German machine with the latest intelligent software, the best in terms of performance, stability, and price. The alternative was a Belgian machine that scored quite well on all the evaluation criteria but lower than the German machine. It had one major difference in design: a keyboard with a screen where the operator had the option to change the operational parameters to improve and fine-tune its performance. With the German machine, that was done automatically by the AI algorithms. The French manager chose the Belgian machine with the simple argument that his operators liked to be in ultimate control of the functioning of the machine. The German argument was that it was empirically proven that the software outperformed any intervention from a human. The latter argument didn’t make a lasting impression on the French manager. The rest is history.

A further example is related to the auto-pilot discussions at Boeing and Airbus. The Guardian announced in the beginning of this year that Boeing and other plane manufacturers are exploring single pilot planes to cut costs. “Cargo planes are likely to be first on the single-pilot trial but passenger jets could follow if there is public support. Once there were three on the flight deck. Then the number of flight crew fell to two when the Boeing 757 changed the way cockpits were designed in the 1980s. Now, jetmakers are studying what it would take to go down to a single pilot, starting with cargo flights. The motivation is simple: saving airlines tens of billions of dollars a year in pilot salaries and training costs if the change can be rolled out to passenger jets after it is demonstrated safely in the freight business.”

The reactions to the introduction of AI in the cockpit were culturally biased. The French, even knowing very well that 60 percent of all plane crashes are caused by human error, would never allow the computer to take over the final power of decision over what the plane does; whereas other cultures were much more relaxed.

So, what do the cases tell us? They inform us that all the dilemmas raised are human and beyond any cultural set of values and norms. But the way we approach the dilemma of ‘control’ vs. ‘go with the flow’ is very much cultural. So what do we do with auto-correct to make it successful around the globe? We first of all need to allow people to switch it on or off, something like what MS Word does with autocorrect. You’re not in control as far as the hints are concerned, but you are in control of whether to use them. That works for every culture. The same could apply to self-driving cars and self-flying planes. You give the driver or pilot serious hints when crossing a white line or flying towards a turbulent area and leave it to them to correct their course. This will work in any culture because it is reconciled.

AI in HR

Can we apply the same methodology to make the introduction of artificial intelligence work in HR across the globe? Sure we can. The types of dilemmas that arise clearly depend on the level at which AI is implemented. Let’s distinguish three levels2:

Assisted Intelligence

This technology is already widely available today and improves what people and organizations are doing by automating repetitive, standardized, and time-consuming tasks and by providing assisted intelligence, as in diagnostic instruments such as MBTI or cultural scans. Examples prevalent in change management today are apps that help you measure the gap between current and ideal culture, or that help in a Cultural Due Diligence process through an M&A App. You can also use the sophisticated Culture for Business App, where the tips you get on managing, negotiating and meeting in 140 countries depend on either the country from which you come or your individual value profile. There are no real dilemmas here. You use the assistance offered or you don’t. For the new versions of cars, it means a built-in GPS where the driver is in full control to use it or not.

Augmented Intelligence

Emerging technology brings a fundamental change in the nature of work by enabling humans and machines to collaborate and make decisions together. It lets us do things we couldn’t otherwise do. For example, blended learning programs exist because of the combination of face-to-face activities and digital programs. AI powers and directs this. Uniquely human traits such as emotional intelligence, persuasion, creativity, and innovation become more valuable through this co-existence of man and machine.

However, the dilemmas that are diagnosed by the apps need to be worked on and reconciled through a face-to-face dialogue as both the meaning of the digital diagnosis becomes deeper and the face-to-face dialogues become more productive. It is the Hi-tech/Hi-touch reconciliation that is strived for. The way this dilemma is approached depends very much on the culture. In the USA, it is easy to start with the app followed by a workshop; and in most of Asia, it is more effective to start with a workshop and plug in the app.

Another good example of augmented intelligence is the value-based recruitment app1 that we developed for some large international organizations. It measures the profile of an applicant’s values and compares it with the organization’s values (either current or desired). Furthermore, it measures the degree to which the applicant is willing and able to reconcile the differences presented by the gap. This type of machine learning is a synergistic exercise between humans and machines. Machine learning in practice requires human application of scientific methods and human communication skills. Successful organizations have the analytic infrastructure, expertise, and close collaboration between analytics and business subject matter experts. For the semi self-driving car, augmented intelligence means that the driver feels some resistance at the steering wheel when getting closer to the lane markings, but still keeps control and can steer to where he needs to go. And in some systems, activating the direction indicator removes the resistance.

Autonomous intelligence

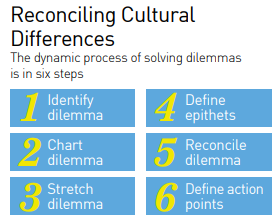

Autonomous intelligence is the most advanced form of technology that relies on AI, establishing machines or other digital devices that act on their own and reach out to the subconscious level of information. An example of this will be fully self-driving vehicles when they come into widespread use. But when algorithms autonomously take over decision-making and selection processes, they create data ethics, privacy, and data security issues and also give rise to a new industry of data science and data governance. In the field of HR, this type of sophisticated use is best illustrated by an app that we are developing, which comes close to deep learning through the networking of intelligence. The example is called the Dilemma Reconciliation Network App. The dilemmas that are raised by the users in M&As, change processes, risk management, etc. are linguistically analyzed and rank-ordered in importance by an algorithm. The top five are sent back to the participants (up to 50,000 is possible), who are asked for their preference. The top one is chosen, and participants get points for their rank-order and for how close it is to the final rank-order. The participants follow the next five steps as presented in the graph.

At every step, participants’ opinions are gathered, compared, and validated by themselves and points are given to those who are closest to the final choices. The algorithms, combined with linguistic analyses, not only help make the choices and enhance the quality of the dialogues but also learn from the previous choices made. At the end of the day, reconciliations are found by idea crowdsourcing that outperforms the outputs that we get through augmented intelligence. And there is more support for the implementation of the outcome because more participants are involved.2
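The ranking-and-points idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the app's actual method: the article does not say how individual rank-orders are combined or how closeness is scored, so the sketch assumes a simple Borda count for the final order and a rank-distance measure for the points. The function names, participant names, and dilemma labels are all hypothetical.

```python
from collections import defaultdict


def aggregate_rankings(rankings):
    """Combine individual rank-orders into one final order (assumed: Borda count)."""
    scores = defaultdict(int)
    for ranking in rankings.values():
        n = len(ranking)
        for position, dilemma in enumerate(ranking):
            scores[dilemma] += n - position  # first place earns the most points
    # Highest score first; ties are broken alphabetically so the result is stable
    return sorted(scores, key=lambda d: (-scores[d], d))


def closeness_points(ranking, final_order):
    """Give more points the closer a participant's order is to the final order."""
    final_pos = {dilemma: i for i, dilemma in enumerate(final_order)}
    # Sum of how far each dilemma sits from its final position
    distance = sum(abs(i - final_pos[d]) for i, d in enumerate(ranking))
    n = len(final_order)
    max_distance = n * n // 2  # distance of a fully reversed order
    return max_distance - distance


# Hypothetical input: every participant ranks the same short list of dilemmas
rankings = {
    "ana": ["control vs flow", "push vs pull", "invent vs adopt"],
    "ben": ["control vs flow", "invent vs adopt", "push vs pull"],
    "chen": ["push vs pull", "control vs flow", "invent vs adopt"],
}

final_order = aggregate_rankings(rankings)
points = {name: closeness_points(r, final_order) for name, r in rankings.items()}
print(final_order)
print(points)
```

The sketch assumes every participant ranks the same set of dilemmas; a real system with tens of thousands of participants would also need to handle partial rankings and the linguistic analysis step, which are left out here.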

In short, the meta-dilemma of ‘control’ vs. ‘go with the flow’ has been reconciled at all three levels. It is the culture that decides where you take the starting point of the reconciliation. And systems that don’t reconcile dilemmas of this nature are artificial and not intelligent.

I've been (we've all been) hacked. Coca-Cola, Amazon, FB etc. are all racing to hack you. Not your smartphone, not your computer, not your bank account. You might have heard we are living in the era of hacking computers, but that's hardly the truth; in fact, we are in the era of hacking humans. They are watching what you buy, where you go, who you meet. If the algorithms understand you better than you know yourself, authority will shift to them. Consumers who are externally controlled might yield to these approaches, but not those amongst us who are internally controlled. Not 'Love Island', not 'Get me out of here': there is a danger we will all be living in 'The Truman Show'. It is those organizations that can reconcile their AI push with consumer-controlled pull that will enjoy a sustainable future.

If you want to know more about AI, then join us for People Matters TechHR Conference on 28th February 2019 at Marina Bay Sands, Singapore.